Apple is working on a product that will let users edit photos using nothing but text prompts. Rather than learning any editing skills, they will be able to type their commands and watch the changes happen.

Apple has introduced a groundbreaking model that lets users describe the changes they want to make to a photo in plain language, without needing complex photo editing software. The model acts on text prompts, so no manual editing is required.

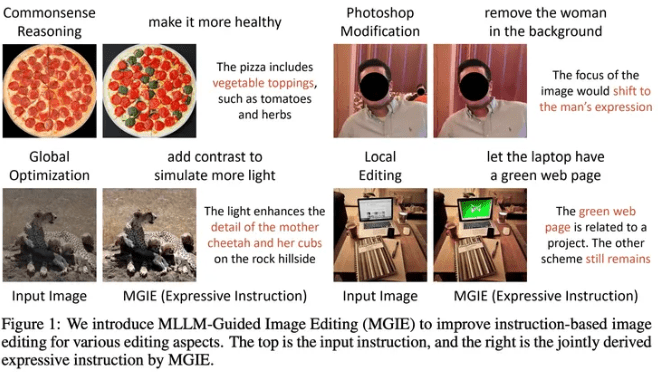

The model, called MGIE (MLLM-Guided Image Editing), was developed in collaboration with the University of California, Santa Barbara. With MGIE, users can perform various editing tasks such as cropping, resizing, flipping, and adding filters to images, all through text prompts.

Whether it’s a simple task like adjusting the brightness or a more complex one like modifying specific objects in a photo, MGIE can handle it. The model works by interpreting user prompts and then “imagining” the desired edits. You can ask for whatever you like.

Add or remove parts of an image

For example, asking for a bluer sky prompts the model to adjust the brightness of the sky portion of the photo. Using MGIE is as easy as typing out what you want to change about the picture. The software can also add items to an image or remove them from it.

For instance, typing “make it healthier” while editing an image of a pepperoni pizza could add vegetable toppings. Similarly, instructing the model to “add more contrast to simulate more light” can brighten a dark photo, such as a picture of tigers in the Sahara.

The researchers behind MGIE emphasise that the model provides explicit visual-aware intentions, leading to reasonable image editing results. They believe that this approach could contribute to future advancements in vision-and-language research.

Apple has made MGIE available for download on GitHub and has also released a web demo on Hugging Face Spaces. However, the company has not disclosed its plans for the model beyond research purposes. We’ll have to wait and see.

While other platforms like OpenAI’s DALL-E 3 and Adobe’s Firefly AI offer similar capabilities, Apple’s entry into the generative AI space signifies its commitment to incorporating more AI features into its devices, with CEO Tim Cook expressing the company’s interest in expanding.